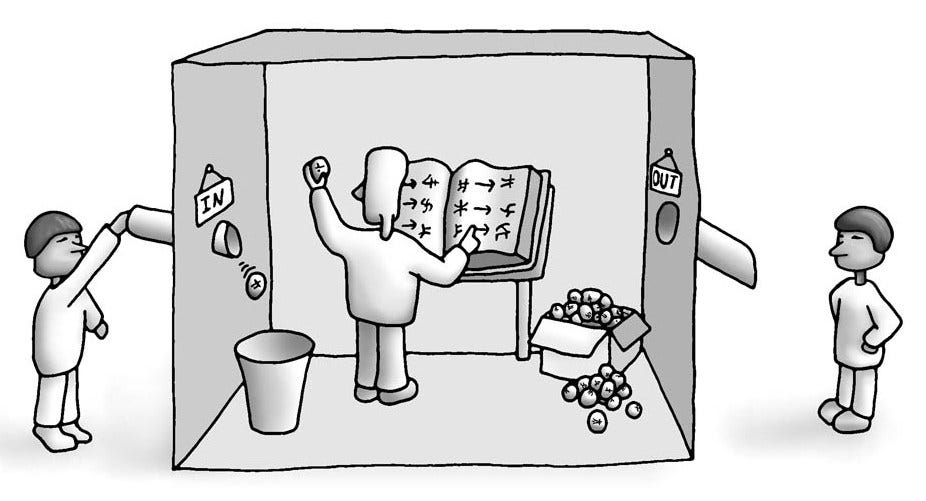

The Chinese Room is a thought experiment devised by the philosopher John Searle. It goes as follows:

"Imagine a native English speaker who knows no Chinese locked in a room full of boxes of Chinese symbols together with a book of instructions for manipulating the symbols. Imagine that people outside the room send in other Chinese symbols which, unknown to the person in the room, are questions in Chinese. And imagine that by following the instructions in the program the man in the room is able to pass out Chinese symbols which are correct answers to the questions. The program enables the person in the room to pass the Turing Test for understanding Chinese but he does not understand a word of Chinese."¹

The idea is that even though computers can process information and return fitting responses, they only manipulate symbols according to rules, so they never grasp what those symbols mean the way a human being does. On this view, human minds must arise from biological processes that cannot be reproduced simply by running a program.

But the argument that computers can’t possess human minds is hard to make when science knows so little about consciousness. No one can explain why consciousness exists or how it works.

For a long time, scientists believed that consciousness developed in humans because emotions and feelings were necessary for survival. When humans felt fear, they ran away from predators; when they felt happy, they tried to repeat the beneficial actions that produced the feeling.

But as we learn more about brain activity, this theory carries less weight. More recent accounts of how humans make decisions focus on electrical signals passed between neurons. When a human sees a predator, signals travel from the eyes to neurons, which in turn send signals to the legs, prompting them to run. So where does consciousness come in? We don’t need to feel emotions if certain stimuli will compel us to perform the corresponding actions anyway.²

As historian Yuval Noah Harari puts it (in the context of a man who sees a lion and runs from it), “if the entire system works by electric signals passing from here to there, why the hell do we also need to feel fear?”²

But if we strip consciousness from the equation, computers could effectively simulate our minds. We would essentially function as programs that produce appropriate outputs in response to given inputs, a task computers are more than capable of performing. The only way computers would be truly unable to simulate human minds is if they couldn’t replicate consciousness. But that is impossible to prove without a real understanding of what consciousness is.

A computer isn’t like a single person in a room answering questions in Chinese with a book of instructions; it’s more like a room filled with thousands of people, each responsible for a different portion of the language. And amid this chaos, no one can predict whether consciousness might form.

If some jumble of electric signals can create the subjective experience of consciousness, why can’t another, similarly organized jumble of electric signals produce the same effect? If the case against computers possessing human-like minds rests on the fact that we can’t see how consciousness or human-like understanding could form in a computer, then by the same reasoning we would have to deny that humans are conscious. We can’t explain how humans are conscious either. If someone built a machine that passed electrical signals from sensors (similar to eyes or ears) to parts capable of physical movement (similar to legs or arms), no one would believe that contraption was conscious.

Without an understanding of when and how consciousness emerges, we can’t rule out the possibility that a computer could replicate it and, in turn, a human mind.

1. The quote is from John Searle's "Minds, Brains, and Programs."

2. See Yuval Noah Harari's *Homo Deus*.